Note: This report was originally published at Bright Side of News* on April 8, 2010. After their server crashed, BSN* has not yet been able to recover the article after several weeks. We are reposting the report here to serve as a mirror of the original article. There are likely to be minor editing differences with the BSN* article.

Note 2: Only a month or two after it was published, a detailed report that I wrote was wiped out during a BrightSideOfNews* hard drive crash. That exhaustive report, praised by many throughout the industry as the finest of its kind yet produced, examined the emerging and inevitable ARM versus x86 clash.

It took a little while and cost BSN* a lot of money to recover the data on the hard drive, but that report is now back up and can be read here.

I’m currently working on a followup to that bit of analysis that will include even more hardware than the initial report. I’m still waiting on a vendor or two, so I can’t promise an ETA yet, but one thing I can state is that the new report will be very interesting.

The computing landscape is changing rapidly and the war between x86 and ARM microprocessors is now underway. The competitors have dramatically different strengths and weaknesses, making for a particularly exciting confrontation.

Most importantly, the results of this war will have profound effects well beyond the CPU market, where several companies will possibly see their fortunes upended. One thing is absolutely certain: computing will never be the same again.

Introduction

In this report we will discuss the emerging competition between ARM and x86 microprocessors. Led by the Intel Atom, x86 chips are quickly migrating downwards into embedded, low-power environments, while ARM CPUs are beginning to flood upwards into the more sophisticated and demanding market spaces currently owned by x86 processors. The central focus of this report will be an extensive compute performance comparison of the ARM Cortex-A8 against the new Intel Atom N450, the new VIA Nano L3050 and, for historical perspective, an old AMD Mobile Athlon based upon the Barton core. The Apple iPad A4 system-on-chip (SoC) is reportedly equipped with a 1GHz ARM Cortex-A8.

The Coming War: ARM versus x86

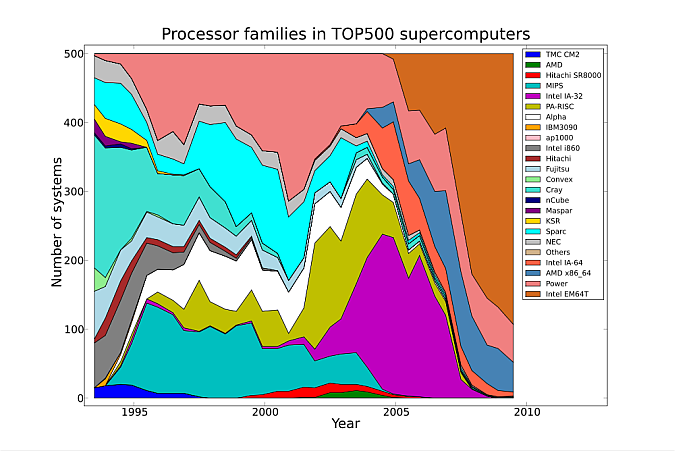

Over the last few years a war has been brewing. Two armies have been massing troops in their respective strongholds. Inside desktops, notebooks, servers and now even reaching into mainframes and supercomputers, the x86 family of microprocessors has mercilessly driven all competitors to extinction.

The “x86” moniker refers to the descendants of the 16-bit Intel 8086. Its little brother, the Intel 8088, which paired the same 16-bit core with an 8-bit external data bus, was the chip that powered the first IBM PC back in August, 1981. Shockingly primitive by today’s standards, the 8088 spoke a computer dialect that is still understood by the most modern, powerful and successful CPUs from Intel, AMD and VIA.

The roll call of those vanquished by the x86 family includes microprocessors from IBM, DEC, Motorola, HP, Sun, Silicon Graphics, Commodore and even rivals from within Intel itself. Resistance has been futile. Eventually, even persistent holdout Apple succumbed to the relentless performance advances of the x86 juggernaut, dumping IBM’s Power architecture for the safety and reliability of x86 microprocessor advancements. Almost like clockwork, x86 CPUs double in capability every 18 months while prices continue to slowly decline.

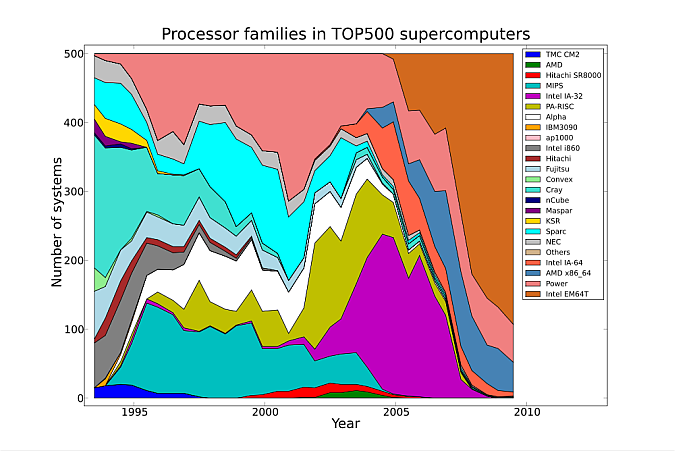

x86 microprocessors, including AMD x86-64 and Intel EM64T, have taken over supercomputing. [Image taken from: http://en.wikipedia.org/wiki/File:Processor_families_in_TOP500_supercomputers.svg]

Yet, almost silently, a stealthy opponent has built up forces within the modest confines of PDAs, calculators, routers, media players, printers, GPS units and a plethora of other embedded devices but most notably mobile phones. Based in Cambridge, England, ARM Holdings dominates 32-bit microprocessor sales despite its very low profile. While AMD, a microprocessor vendor that commands about one-fifth of the x86 market, celebrated the sale of their 500-millionth CPU last July in their 40th year of operation, there were nearly 3-billion ARM chips shipped in 2009 alone.

The history of ARM microprocessors is almost as long as that for x86 CPUs. Sometimes called the “British Apple,” Acorn Computers began in 1978 and created a number of PCs that were very successful in the United Kingdom including the Acorn Electron, the Acorn Archimedes and the computer that dominated the British educational market for many years, the BBC Micro.

As the Commodore-produced, 2 MHz MOS Tech 6502 microprocessor that powered the BBC Micro grew long-in-the-tooth, Acorn realized it needed a new chip architecture to compete in business markets against the IBM PC. Inspired by the Berkeley RISC project, which demonstrated that a lean, competitive, 32-bit processor design could be produced by a handful of engineers, Acorn decided to design its own RISC CPU sharing some of the most desirable attributes of the simple MOS Tech 6502.

Officially begun in October, 1983, the Acorn RISC Machine project resulted in first silicon on April 26, 1985. Known as the ARM1, the chip worked on this first attempt. The first production product, the ARM2, shipped only a year later.

In 1990, Acorn spun off its CPU design team in a joint venture with Apple and VLSI under a new company named Advanced RISC Machines Ltd, which is now an alternative expansion of the original “ARM” acronym. While Acorn Computers effectively folded over ten years ago, its progeny, ARM Holdings, is stronger than ever and dominates the market for mobile phone microprocessors.

Contrary to x86 chipmakers Intel, AMD and VIA, ARM Ltd does not sell CPUs, but rather licenses its processor designs to other companies. These companies include NVIDIA, IBM, Texas Instruments, Intel, Nintendo, Samsung, Freescale, Qualcomm and VIA Technologies. Late last year, AMD spin-off GlobalFoundries announced a partnership with ARM to produce 28-nanometer versions of ARM-based system-on-chip designs.

The ARM Cortex-A8 versus x86

Like the Intel Atom, the ARM Cortex-A8 is a superscalar, in-order design. In other words, the Cortex-A8 is able to execute multiple instructions – in the case of the Atom, up to two – during each clock tick, but can only execute instructions in the order they arrive, unlike the VIA Nano and all current AMD and Intel chips besides the Atom. The Nano, for instance, can shuffle instructions around and execute them out-of-order to improve processing efficiency by about 20-30% beyond superscalar in-order chips.

The immediate predecessor of the Cortex-A8 is the ARM11 which found a home in the original Apple iPhone and countless other smartphones. The ARM11 is a simple, scalar, in-order microprocessor, so the best it can ever do is execute one instruction per clock cycle. As the Cortex-A8 is roughly equivalent to the Intel Atom, the ARM11 is somewhat similar to the VIA C7.

In-order chips suffer a performance hit because processing can come to a screeching halt when an instruction is encountered that takes a long time to complete. On the other hand, out-of-order chips can shuffle instructions around so that forward progress can usually be made while a lengthy instruction is simultaneously processed.

The Intel Atom manages to partially overcome this problem by implementing HyperThreading, Intel’s brand name for its version of simultaneous multithreading (SMT). As with a few other Intel CPUs (and the three IBM PowerPC-based cores in the Xbox 360’s Xenon), the operating system (OS) views the Atom as if it has more processing cores than it actually does. In the case of the single core Atom N450, the OS sees two “virtual” cores. The operating system will accordingly distribute a thread (independently running task or program) to each core at once. Consequently, the Atom often churns through two unrelated instruction streams simultaneously, so even if one gets blocked by a slow, “high latency” instruction, the other thread can usually still be processed.

While HyperThreading doesn’t help much on single threaded tasks – and a vast amount of modern computing remains single-threaded – HyperThreading helps a great deal with slow input/output (I/O) intensive instruction streams since I/O operations can take an eternity from the CPU’s vantage point and can block even an out-of-order core. For instance, the Atom boots Windows 7 relatively quickly compared with even superscalar, out-of-order, single-core chips like the VIA Nano because the Atom can continue processing a second thread and does not have to frequently stop and wait on the vast number of I/O operations encountered during boot-up.

Intel chose to equip the Atom with HyperThreading instead of making the chip out-of-order because HyperThreading is simpler and consumes less power. Intel’s Austin design team created the Atom especially for low-power environments.

However, the benefits of HyperThreading diminish when multiple cores are available. The newer ARM Cortex-A9 MPCore is designed to be deployed in two or more cores, so SMT is not as important under multi-core conditions. For instance, the new NVIDIA Tegra 2 boasts two ARM Cortex-A9 MPCore processors. Moreover, the A9 is superscalar, and out-of-order with speculative execution, putting it on equal footing with the newer x86 chips, at least superficially.

Keep in mind that modern x86 microprocessors tend to be very rich in execution units and, after decades of development, are extremely refined in terms of low instruction latencies and feature sets. Perhaps most importantly, the supporting x86 “ecosystems” are unmatched. “Ecosystem” is the current buzzword that refers to the surrounding chip set, memory, I/O, interconnect and peripheral infrastructure.

Moreover, ARM chips are RISC cores which have reduced instruction sets. In fact, RISC is an acronym for “Reduced Instruction Set Computer” and ARM CPUs typify this genre in many ways.

In general, RISC chips are leaner and usually support fewer instructions than CISC or “Complex Instruction Set Computer” microprocessors. While today’s x86 CPUs wield a decidedly CISC-style instruction set, the underlying hardware has absorbed most of the advantages of RISC while implementing many complex instructions in microcode. For instance, the VIA C3 bolted a CISC x86 frontend over a very MIPS-like RISC core.

An issue to watch out for when comparing ARM CPUs against x86 microprocessors is the size of binary files. In the past, RISC machines have produced larger executables because more instructions are often necessary than with CISC-derived systems. If binary sizes differ significantly, this places greater pressure on cache sizes, RAM size and memory bandwidth. With today’s terabyte-scale mass storage devices, increased binary bloat is not significant since the vast majority of drive space is consumed by video and other multimedia data.

| Binary size comparison | ARM | x86 |
| --- | --- | --- |
| STREAM | 112.3% | 100.0% |
| miniBench | 115.1% | 100.0% |
| CoreMark | 107.3% | 100.0% |

The table above shows that ARM Cortex binaries are indeed larger than x86 binaries, but the difference is only about 10-15 percent. If this sampling is representative for both platforms, binary size differences will rarely matter. ARM L1i and L2 caches should minimally be as large as those found on x86 microprocessors, but that is not currently the case, as will be discussed shortly.

ARM representatives responded with the following:

The binary size of the ARM benchmarks is significantly lowered with the Thumb-2 hybrid instruction set. Expected results are 20-30% lower code size at equivalent or better performance. The 10.0x version of Ubuntu Linux has been optimized for Thumb-2. (The version as tested was Ubuntu 9.04.)

Of course, the real story in the battle between ARM and x86 is how they measure up against each other in the performance arena. In this report, we’ll take a close look at competitive performance across a broad range of tests and also take a peek at power usage.

Benchmarking considerations

Normally it is a primary prerequisite to ensure that all systems under test have been configured identically prior to benchmarking. Unfortunately, this is impossible to achieve in this report given the highly integrated nature and grossly dissimilar “ecosystems” of ARM versus x86 microprocessors. For instance, the Freescale i.MX515 system that we used in our tests only supports DDR2-200 32-bit memory, much slower than the VIA Nano L3050 system’s DDR2-800 64-bit memory. Worse, the i.MX515’s integrated video solution, which maxes out at 1024×768 at 16-bit color depth, is far more limited than the graphics solutions on any of the x86 systems.

Given this rigidly set, unlevel playing field, we deployed a battery of benchmarks that run primarily within the CPU’s caches. In other words, we made an attempt to only measure CPU-bound performance.

We verified the CPU sensitivity of each test by increasing the clock speed of the VIA Nano from 800MHz to 1800MHz. Tests should scale closely to the clock speed ratio of 225 percent.

| Benchmark | Scaling |
| --- | --- |
| Hardinfo | 226% |
| Peacekeeper | 209% |
| Google V8 | 272% |
| SunSpider | 228% |
| miniBench | 220% |
| CoreMark | 225% |
| stream add | 108% |

As shown in the table above, all of the benchmarks scaled appropriately with the exception of Google V8 and Stream Add, a memory bandwidth test that is constrained by memory performance and is included here as a counter example. Benchmarks that scale superlinearly (superlinear: to increase at a rate greater than can be described with a straight line) like Google V8 usually are not good benchmarks. Indeed, Google V8 also demonstrated very large run-to-run variations on several tests like EarlyBoyer and RegExp. Nevertheless, we have included full Google V8 results since it remains a popular JavaScript benchmark.
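The scaling check can be sketched in a few lines of Python. The percentages are the ones reported in the table; the 10 percent tolerance and the helper name `scaling_deviation` are assumptions made for this illustration and are not part of the original methodology.

```python
# Ideal scaling when the Nano goes from 800 MHz to 1800 MHz.
EXPECTED = 1800 / 800 * 100  # 225.0 percent

# Measured scaling (percent), copied from the table in this section.
measured = {
    "Hardinfo": 226,
    "Peacekeeper": 209,
    "Google V8": 272,
    "SunSpider": 228,
    "miniBench": 220,
    "CoreMark": 225,
    "stream add": 108,
}

TOLERANCE = 10.0  # percent; an arbitrary threshold chosen for this sketch


def scaling_deviation(percent, expected=EXPECTED):
    """Relative deviation from ideal clock scaling, in percent."""
    return (percent - expected) / expected * 100


# Keep only the benchmarks that stray too far from the clock ratio.
outliers = {
    name: round(scaling_deviation(pct), 1)
    for name, pct in measured.items()
    if abs(scaling_deviation(pct)) > TOLERANCE
}
print(outliers)
```

With this threshold only Google V8 (superlinear) and stream add (memory-bound) are flagged, which matches the two exceptions called out in the text.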

Speaking of run-to-run variation, we ran each test at least three times and calculated the coefficient of variation (CV) to ensure result validity.
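For readers unfamiliar with the statistic, the CV is simply the standard deviation of the runs divided by their mean. A minimal sketch follows; the three scores are hypothetical values invented for illustration, and the use of the sample (rather than population) standard deviation is an assumption, since the report does not say which was used.

```python
import statistics


def coefficient_of_variation(samples):
    """Sample standard deviation divided by the mean, as a percentage."""
    mean = statistics.mean(samples)
    return statistics.stdev(samples) / mean * 100


# Hypothetical scores from three runs of one test (not measured data).
runs = [1012.0, 998.0, 1005.0]
cv = coefficient_of_variation(runs)
print(f"CV = {cv:.2f}%")
```

A small CV indicates that the runs agree closely; a large one, as seen on some Google V8 subtests, suggests the result should be treated with caution.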

For this report, we placed four CPUs under test: the 800MHz Freescale i.MX515 which is based upon the ARM Cortex-A8, the new VIA Nano L3050 downclocked to 800MHz, the new Intel Pineview-based Atom N450 downclocked to 1GHz and, for historical perspective, an 800MHz Mobile Athlon (Barton core).

Unfortunately, it was impossible to downclock the 1.67GHz Atom N450 below 1GHz, but, as you will see, the results we obtained are still very interesting. The Atom N450 introduces an on-die GPU which significantly reduces overall platform power consumption compared with the older Silverthorne-based Atom platforms.

I purchased a Gateway LT2104u netbook from Best Buy for this report in order to test the Intel Atom N450. The Gateway is a very well executed netbook design with a solid feel, attractive appearance, excellent battery life and good feature set.

The VIA Nano L3050 is the new, second generation, “CNB” Nano that boosts performance by 20-30 percent over the original “CNA” Nano, while also reducing power demands by similar amounts. The CNB-based Nano is still built on the same 65nm Fujitsu process used for the original CNA-based VIA Nano. Despite these improvements, the CNB Nano die-size is almost identical to its predecessor’s at around 62-64 square millimeters.

The table below summarizes relevant system details.

| | Freescale i.MX515 (ARM Cortex-A8) | Mobile Athlon (Barton) | VIA Nano L3050 | Intel Atom N450 |
| --- | --- | --- | --- | --- |
| L1i | 32 kB | 64 kB | 64 kB | 32 kB |
| L1d | 32 kB | 64 kB | 64 kB | 24 kB |
| L2 | 256 kB | 512 kB | 1,024 kB | 512 kB |
| frequency | 800 MHz | 800 MHz | 800 MHz | 1,000 MHz |
| memory speed | DDR2-200 MHz (32-bit) | DDR-800 MHz | DDR2-800 MHz | DDR2-667 MHz |
| operating system | Ubuntu 9.04 | Ubuntu 9.04 | Ubuntu 9.04 | Jolicloud (Ubuntu 9.04) |
| gcc | 4.3.3 | 4.3.3 | 4.3.3 | 4.3.3 |
| Firefox | 3.5.7 | 3.5.7 | 3.5.7 | 3.5.7 |

All systems ran Ubuntu Linux Version 9.04 with the exception of the Atom netbook where we had to install Jolicloud Linux because of video driver issues. However, Jolicloud is based upon Ubuntu 9.04, so programs installed from the Ubuntu repositories were identical.

We chose Ubuntu 9.04 because the ARM-based Pegatron nettop we used in this report came with Ubuntu 9.04 preinstalled. An attempt to upgrade that box to the latest version of Ubuntu failed due to insufficient disk space. The Pegatron device was equipped with a 4GB flash drive.

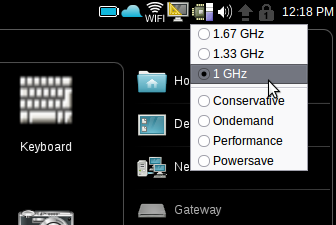

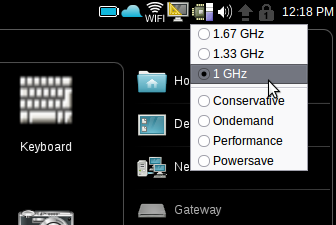

We underclocked the 1.8GHz VIA Nano L3050 to 800MHz by using the CPU multiplier setting in the Centaur reference system’s BIOS. We verified the proper clock speed by reading MSR 0x198. For the Atom N450 Gateway netbook, we underclocked the Atom to 1GHz using the Gnome CPU Frequency Monitor taskbar applet. This handy applet does not support the VIA Nano yet.

We used the Gnome CPU Frequency Monitor applet to set the Atom’s clock speed to 1GHz.

For JavaScript tests, all systems ran Firefox version 3.5.7. It is very important to use the same browser version for JavaScript tests because performance can vary tremendously from browser to browser or even version to version of the same browser.

We thank C.J. Holthaus and Glenn Henry from Centaur Technology for the VIA Nano L3050 reference board, and Katie Traut and Phillipe Robin from ARM for the tiny, Ubuntu-based Pegatron prototype system built around the Freescale i.MX515.

The Pegatron “nettop” is only slightly larger than a CD case yet it boasts a full complement of features including 512MB of DDR2-200MHz memory (32-bit interface), a VGA connector, wireless “N” networking, Bluetooth 2.1 + EDR, a flash memory card reader, and audio, headphone, Ethernet and USB ports. Total system power usage rarely rises much above 6 Watts.

Unless specified otherwise, all benchmark results are reported so that larger numbers correspond to better performance. Many tests have been “normalized” against the ARM Cortex-A8 so that results are reported in terms of the performance ratio with the Cortex-A8. For instance, if the Atom is twice as fast as the Cortex-A8 on a certain test, it will score 2.00.
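As a minimal sketch of that normalization, assuming raw scores where higher is better: the function name `normalize` and the raw numbers below are hypothetical and chosen only so that the Atom lands on the 2.00 example from the text.

```python
def normalize(scores, baseline="Cortex-A8"):
    """Express each score as a ratio to the baseline chip's score."""
    base = scores[baseline]
    return {chip: round(score / base, 2) for chip, score in scores.items()}


# Hypothetical raw scores (higher is better); not results from this report.
raw = {"Cortex-A8": 500.0, "Athlon": 650.0, "Nano": 700.0, "Atom": 1000.0}
normalized = normalize(raw)
print(normalized)
```

The baseline chip always scores 1.00, so any bar above 1.00 in a normalized chart means that chip outran the Cortex-A8 on that test.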

A gander at memory subsystem performance

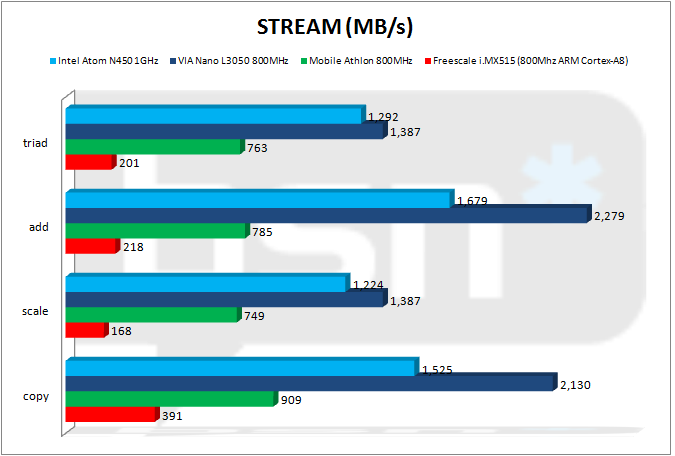

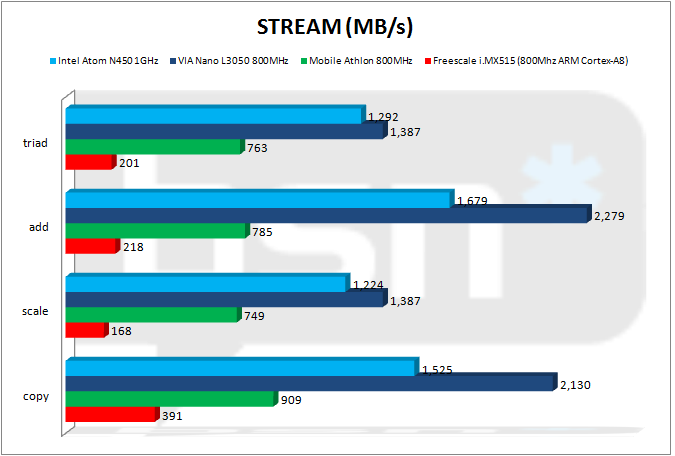

As mentioned earlier, the memory subsystems vary significantly among these dissimilarly configured systems. The ARM Cortex-A8 struggles with its very weak DDR2-200MHz, 32-bit memory.

Nevertheless, memory bandwidth results are important because they underscore a handicap that ARM must eventually address. ARM systems have typically been optimized for extreme low-power environments while x86 systems have been aggressively optimized for performance. A sacrifice made in the Freescale i.MX515 is memory speed exchanged for low power usage, but this absolutely destroys performance on many types of tasks as exemplified by our STREAM results.

As can be seen in the graph above, the ARM Cortex-A8 as part of the Freescale i.MX515 struggles against even the ancient AMD Athlon and is creamed by the VIA Nano and the Intel Atom. While part of the problem is its pokey memory, another component is the ARM chip’s meager 32-bit memory interface, half the width used for single-channel memory access by x86 chips. If the Cortex-A8 were equipped to access DDR2-800 memory through a 64-bit interface, it might very well keep up with its x86 rivals in terms of memory bandwidth.
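A back-of-the-envelope calculation shows how large the gap is on paper. This sketch assumes the figures quoted in this report (200 and 800) are transfer rates in millions of transfers per second; if they are instead clock frequencies, the absolute numbers change but the ratio between the two systems does not. The helper name is hypothetical.

```python
def peak_bandwidth_mb_s(transfers_mt_s, bus_width_bits):
    """Theoretical peak bandwidth in MB/s: transfers per second times bytes per transfer."""
    return transfers_mt_s * bus_width_bits // 8


# Assumption: "DDR2-200" and "DDR2-800" are read as 200 and 800 MT/s.
imx515 = peak_bandwidth_mb_s(200, 32)  # 32-bit interface on the i.MX515
nano = peak_bandwidth_mb_s(800, 64)    # 64-bit interface on the Nano system
print(imx515, nano, nano / imx515)
```

Under these assumptions the Nano system has eight times the theoretical peak bandwidth: a factor of four from the memory speed and a factor of two from the interface width. Measured STREAM results will be lower than these peaks on every system.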

For this report, ARM representatives explained the design decisions behind the Freescale i.MX515 used in our Pegatron prototype:

The ARM ecosystem is centered on a “right-sized” computing philosophy. ARM Partners design their SoCs to a particular set of applications, enabling the best tradeoff for power, cost and performance for a given application. The Freescale i.MX51 was designed for a particular application class, with the memory subsystem designed for the needs of these applications. It is understandable that the performance of this memory subsystem will be different from platforms targeted at general purpose computing applications.

Incidentally, the VIA Nano can also be configured to support 32-bit memory access. This is desirable in severely space constrained environments where trace and pin counts adversely impact package and PCB implementation size.

Integer Performance

Although it might not always appear to be the case, all computing is the processing of numbers. From the words of a love letter, to the glistening dew drops on a rose, to Johnny Cash’s rumbling, anguished, repentant voice, to Gordon Freeman’s apocalyptic universe, to the ruby slippers on Dorothy’s feet, all are simply numbers to a computer.

For most chores, the only numbers that matter are integers. Integers are the natural counting numbers like 1, 2, 3 and their negative counterparts plus zero. With the exclusion of 3D gaming and some types of video and still image rendering, encoding and manipulation, the vast bulk of day-to-day computing is integer-based. The integer test results we look at here can give us insight into typical system performance across chores like word processing and web browsing.

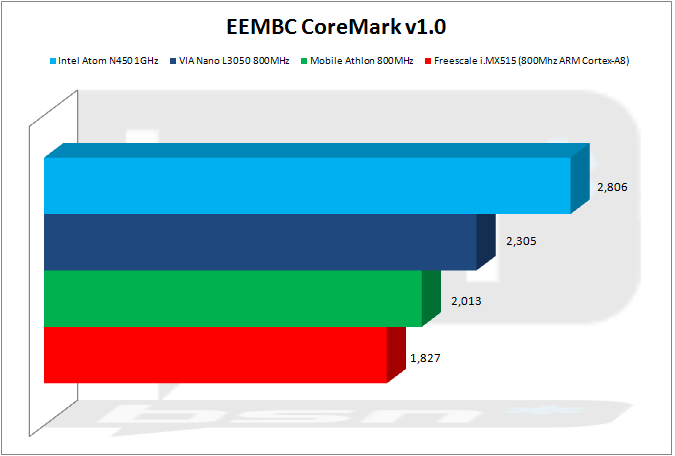

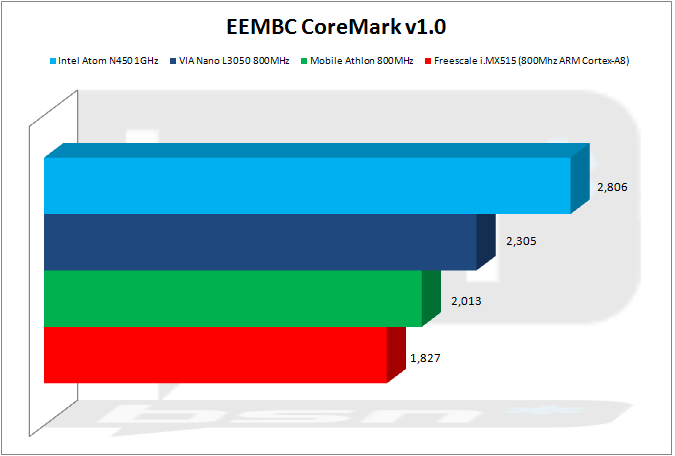

The Embedded Microprocessor Benchmark Consortium (EEMBC) recently released a benchmark that is freely available to anyone. Dubbed “CoreMark,” this test provides a quick way to compare CPU performance across entirely different processor architectures.

We compiled CoreMark on each platform using GCC version 4.3.3 and the following flags:

-O3 -DMULTITHREAD=4 -DUSE_FORK=1 -DPERFORMANCE_RUN=1 -lrt

We chose to generate four threads to ensure scaling across a variety of systems featuring multiple cores and/or HyperThreading like the Intel Atom.

As you can see from the graph above, the ARM Cortex-A8 is very competitive on EEMBC CoreMark, running almost as fast as the Athlon and Nano. The Atom pulled ahead thanks to HyperThreading combined with its 25 percent clock speed advantage over the other chips. Unfortunately, there aren’t many more overall wins for the Atom ahead; please note, however, that most of the remaining tests are single-threaded.

“miniBench” is a diverse benchmark that I’ve been working on for several years. It’s part of my OpenSourceMark benchmarking project. miniBench contains a wide variety of popular tests and runs quickly from the command-line. I also have a GUI-based version that I wanted to use for this report but could not do so because the Qt tool chain would not install completely on the ARM system. Instead, I used the excellent and relatively lightweight Code::Blocks IDE to create and manage the necessary C++ project files for a command-line binary.

You can download the x86 Code::Blocks project here. An x86 Linux binary compiled with static libraries is here. A similar ARM Cortex-A8 Linux binary is here. Both the x86 Linux project and the ARM Cortex-A8 project will eventually be uploaded to the OpenSourceMark SourceForge page, along with GUI adaptations of these benchmarks.

The ARM Cortex-A8 struggles on three of the five tests in this first miniBench chart. Heap Sort is the worst result for the A8 and this is almost certainly because the test appears to be significantly impacted by memory bandwidth. The i.MX515 system is saddled with very poor bandwidth as already demonstrated in this report. Integer Matrix Multiplication is another memory bandwidth sensitive test where the ARM chip comes up short.

However, the ARM Cortex-A8 is extremely impressive on the Integer Arithmetic test, blowing away the Athlon and doubling the Atom’s performance. The Integer Arithmetic test does exactly what you’d expect it to do: it performs a large number of very simple integer arithmetic calculations.

Also notice that the 800MHz ARM Cortex-A8 beats the 1GHz Intel Atom N450 on the ubiquitous Dhrystone benchmark despite the fact that the ARM chip spots the Atom a 25 percent clock speed advantage. ARM advertises that we should be able to get 1,600 Dhrystone MIPS from an 800MHz Cortex-A8. On our tests, the 800MHz ARM Cortex-A8 achieved 1,680 Dhrystone MIPS.
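The Dhrystone figures are easier to compare once they are divided by clock speed. The sketch below uses the two numbers quoted above; the 1757 divisor is the conventional VAX 11/780 reference score used to turn Dhrystones per second into DMIPS, and it is an assumption that miniBench applies the same convention.

```python
VAX_DHRYSTONES_PER_SEC = 1757  # conventional VAX 11/780 reference score


def dmips(dhrystones_per_sec):
    """Convert a raw Dhrystones-per-second score into Dhrystone MIPS."""
    return dhrystones_per_sec / VAX_DHRYSTONES_PER_SEC


def dmips_per_mhz(dmips_value, mhz):
    """Clock-independent Dhrystone efficiency."""
    return dmips_value / mhz


advertised = dmips_per_mhz(1600, 800)  # ARM's advertised figure at 800 MHz
measured = dmips_per_mhz(1680, 800)    # the result obtained in this report
print(advertised, measured, f"{(1680 / 1600 - 1) * 100:.0f}% above advertised")
```

In other words, the advertised figure corresponds to 2.0 DMIPS/MHz and the measured result to about 2.1 DMIPS/MHz.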

It’s clear that the ARM Cortex-A8 is aggressively optimized for Dhrystone performance, which is consistent with how prominently ARM touts the chip’s Dhrystone throughput.

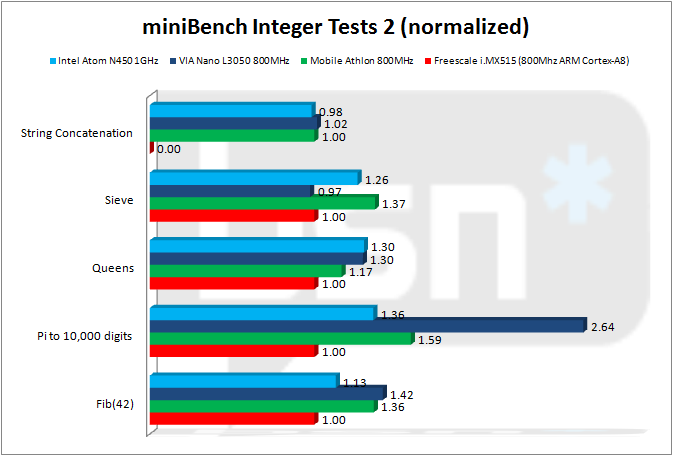

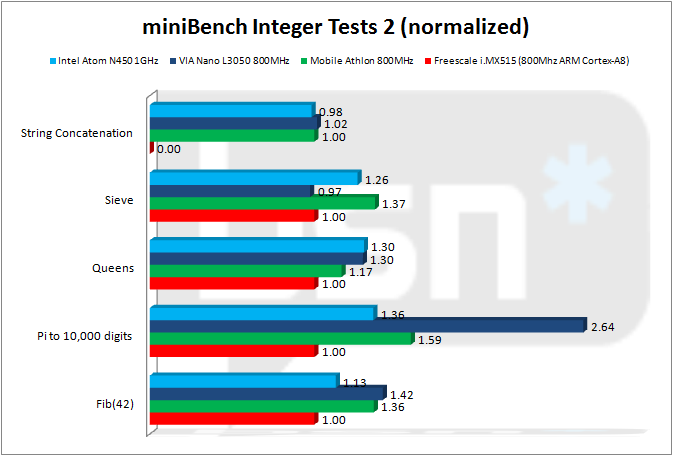

On the second set of miniBench integer tests, the ARM Cortex-A8 holds its own against the brawnier x86 CPUs. The ARM Cortex-A8 even beat the VIA Nano L3050 on the Sieve test. More remarkably, the Cortex-A8 is very close to parity with the Atom across all of these tests, save for one, if the Atom’s 25 percent clock speed advantage is considered.
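To make the clock-speed adjustment concrete, a raw score ratio can be divided by the clock ratio to estimate per-clock performance. This is a rough sketch: it assumes performance scales linearly with clock speed, which the scaling table earlier in this report supports only for CPU-bound tests, and the input ratio below is hypothetical.

```python
def per_clock_ratio(score_ratio, clock_a_mhz, clock_b_mhz):
    """Convert a raw score ratio (chip A over chip B) into a per-clock ratio."""
    return score_ratio / (clock_a_mhz / clock_b_mhz)


# Hypothetical: the 1000 MHz Atom scores 1.25x the 800 MHz Cortex-A8.
adjusted = per_clock_ratio(1.25, 1000, 800)
print(adjusted)
```

A raw Atom lead of 1.25 therefore corresponds to parity per clock, and any raw lead smaller than that means the Cortex-A8 is doing more work per cycle.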

Notice, though, that the ARM chip could not run the String Concatenation test. This is an important indication of the relatively immature state of ARM’s Linux/GNU software support. Ubuntu as a whole was often flaky. Doubtlessly, this will improve with time.

The VIA Nano L3050 obliterates all of the competition on the hashing tests because the Nano features hardware support for these important security functions.

However, the 800MHz ARM Cortex-A8 is amazingly good at hashing and thoroughly beats the 1GHz Atom on both tests and is only slightly slower than the Athlon.

The VIA Nano L3050 enjoys its biggest triumph on the miniBench cryptography tests because the Nano is equipped with robust hardware support for AES ECB encryption and decryption.

Again, the ARM Cortex-A8 remains very close to the Intel Atom if the Atom’s 25 percent clock speed advantage is considered.

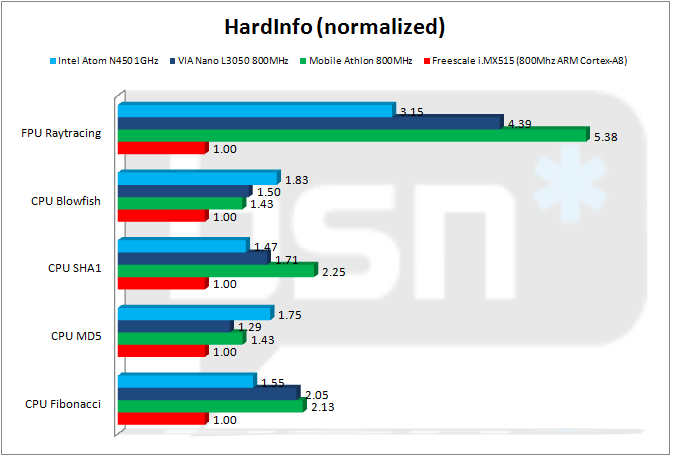

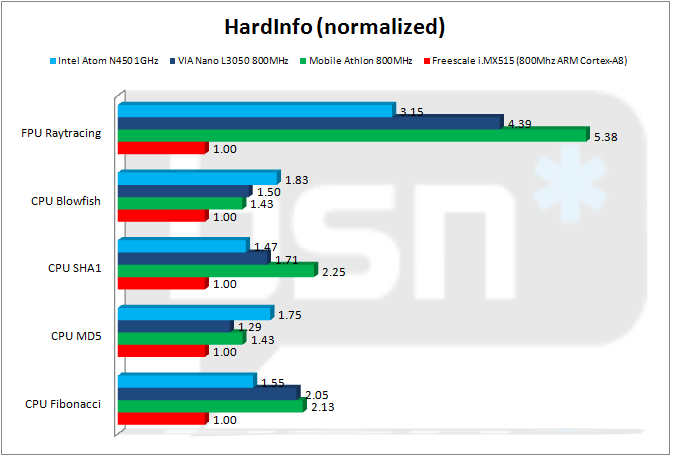

HardInfo is one of the few CPU benchmarks available from within Ubuntu’s repositories.

The ARM Cortex-A8 doesn’t perform quite as well on HardInfo as it did on miniBench, possibly because I used very aggressive optimization flags for both platforms when compiling miniBench. Nevertheless, the ARM Cortex-A8 stays within spitting distance of the x86 CPUs except on the FPU Raytracing test which is not an integer test but rather a floating-point test.

Floating-point performance is the ARM Cortex-A8’s Achilles’ heel as we will see in the next section.

Floating-point performance

Gaming, scientific computing, certain spreadsheets like financial simulations and some image and video manipulation tasks involve fractional and irrational numbers. These are handled as “floating-point” values, so called because the decimal or radix point can float around among the significant digits of a number. Floating-point performance has become increasingly important in modern computing.

However, good floating-point performance is relatively hard to engineer and requires a substantial number of additional transistors. Of course, this drives up power usage. Typically, floating-point intensive operations consume more power than pure integer tasks. In fact, miniBench’s LinPack test was the worst case power consumer on the VIA Nano. Centaur discovered this while I worked there as head of benchmarking. However, this does not include “thermal virus” programs like the absolute worst case program developed by Glenn Henry, Centaur’s president.

Integrated floating-point (FP) hardware is a fairly new addition to ARM processors and even though the Freescale i.MX515 ARM Cortex-A8 features two dedicated floating-point units, there are still severe limitations. The faster of the two FP units is the “Neon” SIMD engine, but it only supports 32-bit single-precision (SP) numbers. Single-precision numbers are too imprecise for many types of calculations.
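The precision limit of single-precision numbers is easy to demonstrate. The sketch below uses Python's standard `struct` module to round a double-precision value to the nearest single-precision value; the helper name `to_single` is hypothetical, and the example says nothing about Neon itself, only about the 32-bit format it operates on.

```python
import struct


def to_single(x):
    """Round a Python float (double precision) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


# A 32-bit float carries a 24-bit significand, so 2**24 + 1 cannot be stored exactly.
big = 16777217.0
print(to_single(big) == big)

# 0.1 is inexact in both formats, but the single-precision error is far larger.
print(abs(to_single(0.1) - 0.1))
```

Single precision offers roughly seven significant decimal digits against roughly sixteen for double precision, which is why long accumulations and many scientific and financial calculations need the 64-bit format.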

Hardware support for 64-bit, double-precision, floating-point calculations is provided by the “Vector Floating-Point” (VFP) unit, a pretty weak coprocessor. And despite being called a “vector” unit, the VFP can only really operate on scalar data (one value at a time), although it does support SIMD instructions, which help improve code density.

Oddly enough, during our performance optimization experiments, Neon generated the same level of double-precision performance as the VFP, while doubling the VFP’s single-precision performance. When we asked ARM about this, company representatives replied, “NEON improves FP performance significantly. The compiler should be directed to use NEON over the VFP.”

We therefore compiled miniBench to leverage Neon for this report. Note that while the Neon compiler flag was used for the ARM chip, none of the tests are explicitly SIMD optimized – the x86 version of miniBench used in this report does not include hand-coded SSE or SSE2 routines and the ARM Cortex-A8 version of miniBench does not include similar Neon code.

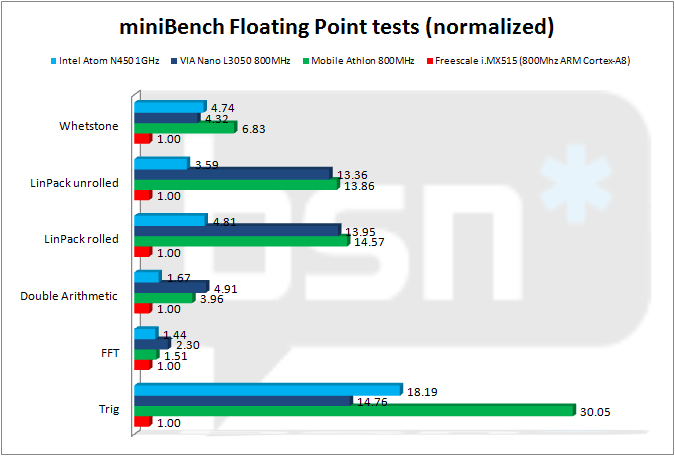

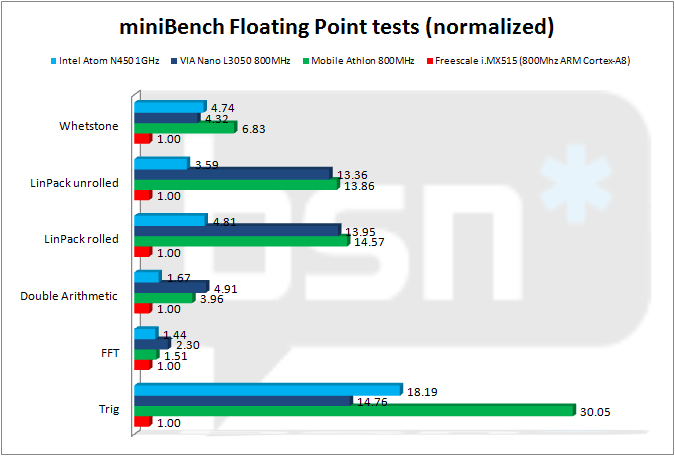

In the miniBench MFLOPS tests, the ARM Cortex-A8 looks pretty bad except on division.

While the VIA Nano has the best DP (double-precision) performance, note how well the Intel Atom N450 handles SP calculations.

It is also worthwhile to recognize the very good floating-point division performance of the ARM Cortex-A8’s Neon. Unlike all of the x86 chips that I have ever tested, the Cortex-A8 delivers identical throughput for both floating-point division and multiplication. Division is much slower on x86 processors than multiplication. Consequently, the Cortex-A8 keeps up very well with the x86 CPUs in this report on DP division, more than doubling the Atom’s performance when the Atom’s clock speed advantage is considered. In single-precision division, the ARM Cortex-A8 beats ALL of the x86 microprocessors it’s pitted against here.

The ARM Cortex-A8 continues to languish on the remaining miniBench floating-point tests with two notable exceptions. The Cortex-A8 is fairly strong on FFT calculations, an extraordinarily important algorithm for many, many tasks. The ARM chip is also competitive with the Atom on the Double Arithmetic test.

Observe how the old Barton-core Mobile Athlon demolishes all of the other chips on Trig. AMD has historically provided industry leading performance on transcendental calculations, while the same area has always been a big weakness for VIA’s CPUs. ARM really needs to bolster their chips’ performance on transcendental operations like the trigonometry functions exercised in this test.

The takeaway from this section is that the ARM Cortex-A8 does not deliver acceptable floating-point performance for netbooks, notebooks or desktops compared with x86 CPUs. This is an area ARM must address if the company plans to compete toe-to-toe with x86 microprocessors.

JavaScript performance

JavaScript performance has become very important as cloud-based computing has finally begun to take hold with the appearance of solutions like Google Apps, Zoho Office, Adobe’s Acrobat.com, Aviary and many more applications. The Google Android operating system largely forgoes native applications and leverages Web-based JavaScript programs. Jolicloud Linux takes a similar but less aggressive tack, allowing native and cloud-based applications to seamlessly co-exist.

There are several widely used JavaScript tests that run across all of the CPUs examined in this report. However, it is very important to run these tests on the same browser across all platforms. Even the specific browser version matters because JavaScript performance varies wildly from browser to browser and version to version as web browser developers push each other in a mad race to provide the fastest JavaScript engines.

Thankfully, Firefox 3.5.x is available for each system included in this report and we used it for these tests.

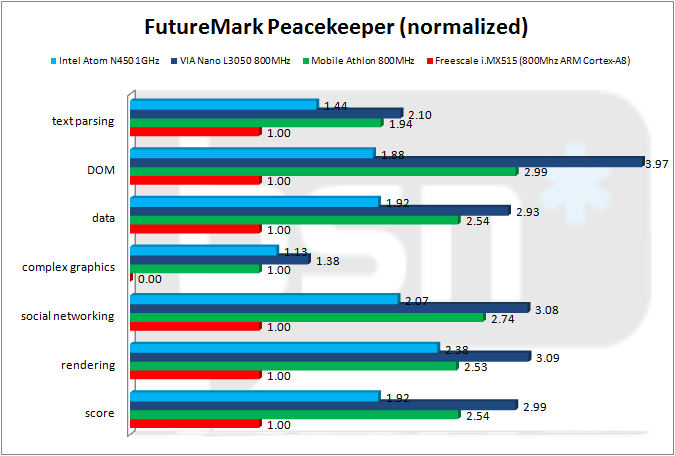

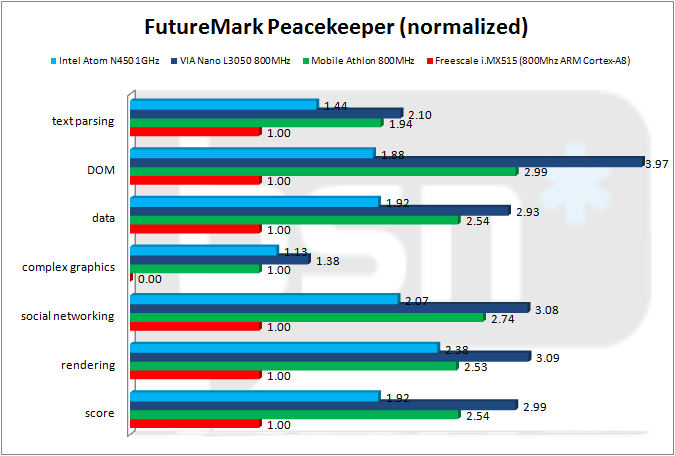

FutureMark, the maker of PCMark and 3DMark, has introduced its own JavaScript benchmark called Peacekeeper. FutureMark Peacekeeper is hands down the most elaborate JavaScript benchmark currently available, although it is difficult to assess its validity. Peacekeeper is the only JavaScript test in our roundup that has complex graphical components.

The Freescale i.MX515 ARM system fared poorly against its x86 rivals across all Peacekeeper tests. This might be partially accountable to the slow main memory subsystem which saddled Cortex-A8. The i.MX515 Cortex-A8 only has 256kB of L2 cache compared to 512kB for the Athlon and the Atom and 1,024kB for the Nano, so it is much easier for a benchmark to spill out of the Cortex-A8’s L2 cache and into its extremely slow main memory.

ARM representatives agreed that the Cortex-A8’s poor showing on FutureMark Peacekeeper is most likely due its L2 handicap, perhaps making Peacekeeper, in the context of this report, more of a comparison of memory subsystems, not processors.

Note also that the ARM system failed to complete the Peacekeeper complex graphics test.

The VIA Nano L3050 was the clear winner of FutureMark’s Peacekeeper, besting all of its rivals on every test. Even though the Intel Atom N450 was far behind the two other x86 chips, its overall score was nearly twice that of the ARM system. Again, keep in mind that the Atom also ran with a 25 percent clock speed advantage over the other chips in this comparison. Also be aware that JavaScript is not threaded, so the Atom’s HyperThreading engine won’t help it much on JavaScript tests.

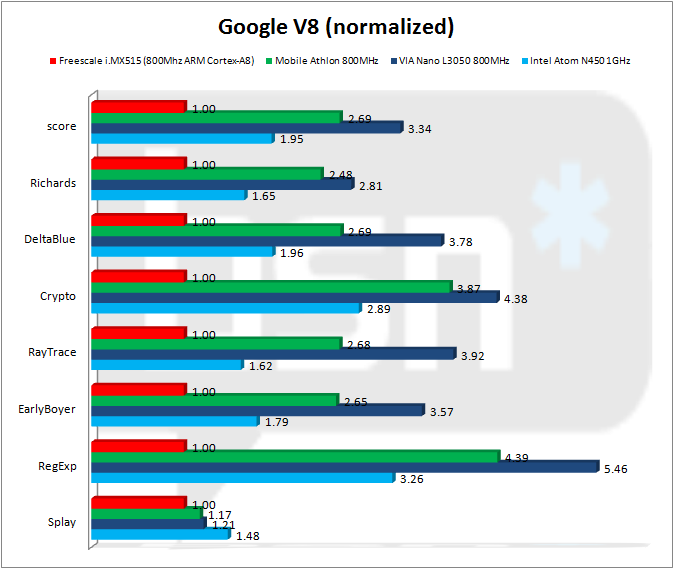

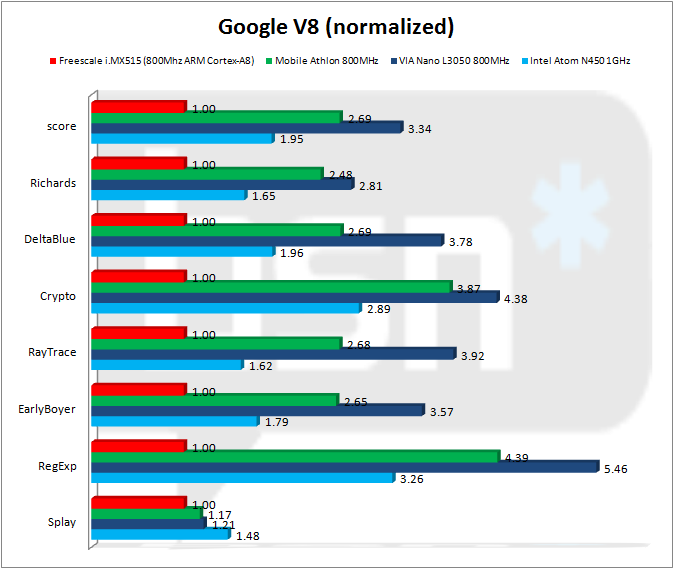

With Google in the lead of cloud-based computing efforts, it should not be surprising that the search engine giant also provides its own JavaScript benchmark. Unfortunately, the Google V8 benchmark does not behave like a very good benchmark at this point, demonstrating large run-to-run variation and superlinear scaling. Nevertheless, Google V8 is a popular JavaScript benchmark, so we included it here.

The Google V8 benchmark closely reproduced FutureMark Peacekeeper’s results. VIA’s Nano L3050 won every test by significant margins again. The Atom trailed the other x86 processors badly, but still nearly doubled the ARM Cortex-A8’s showing.

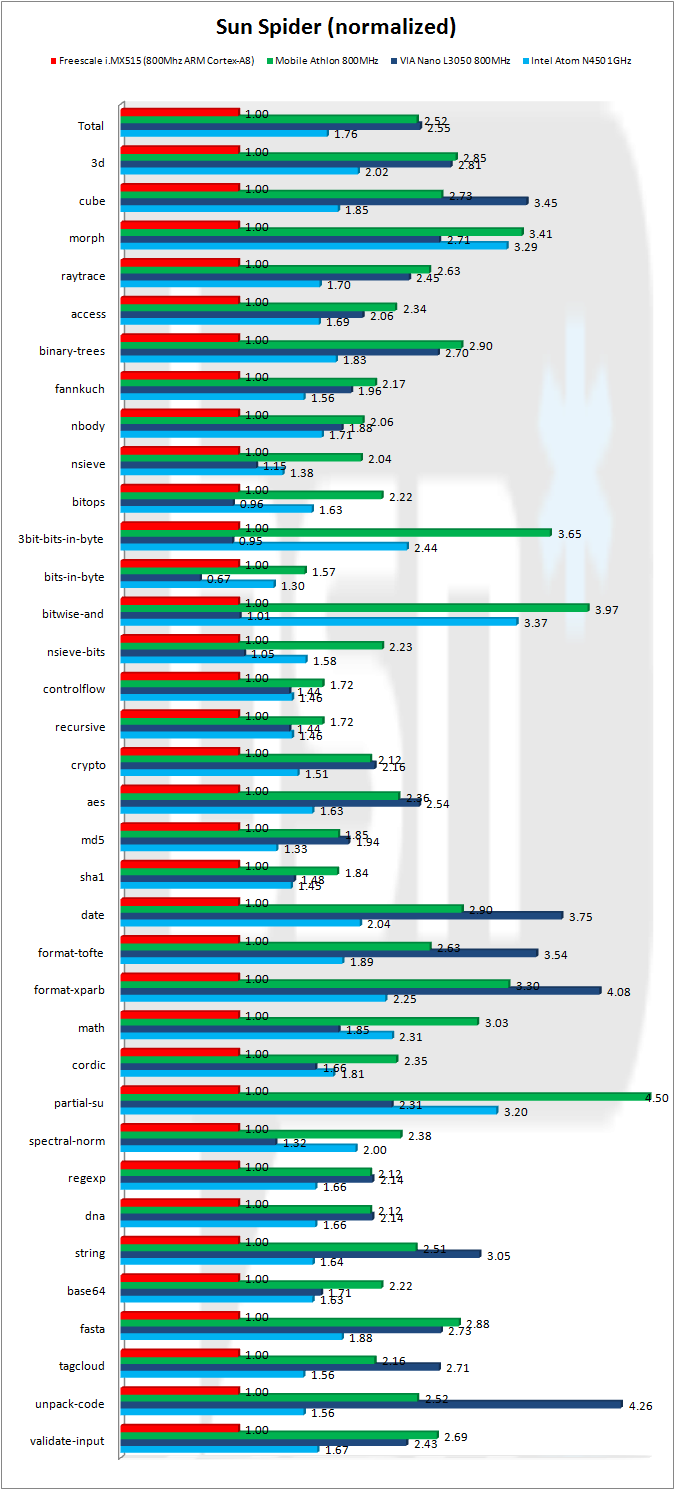

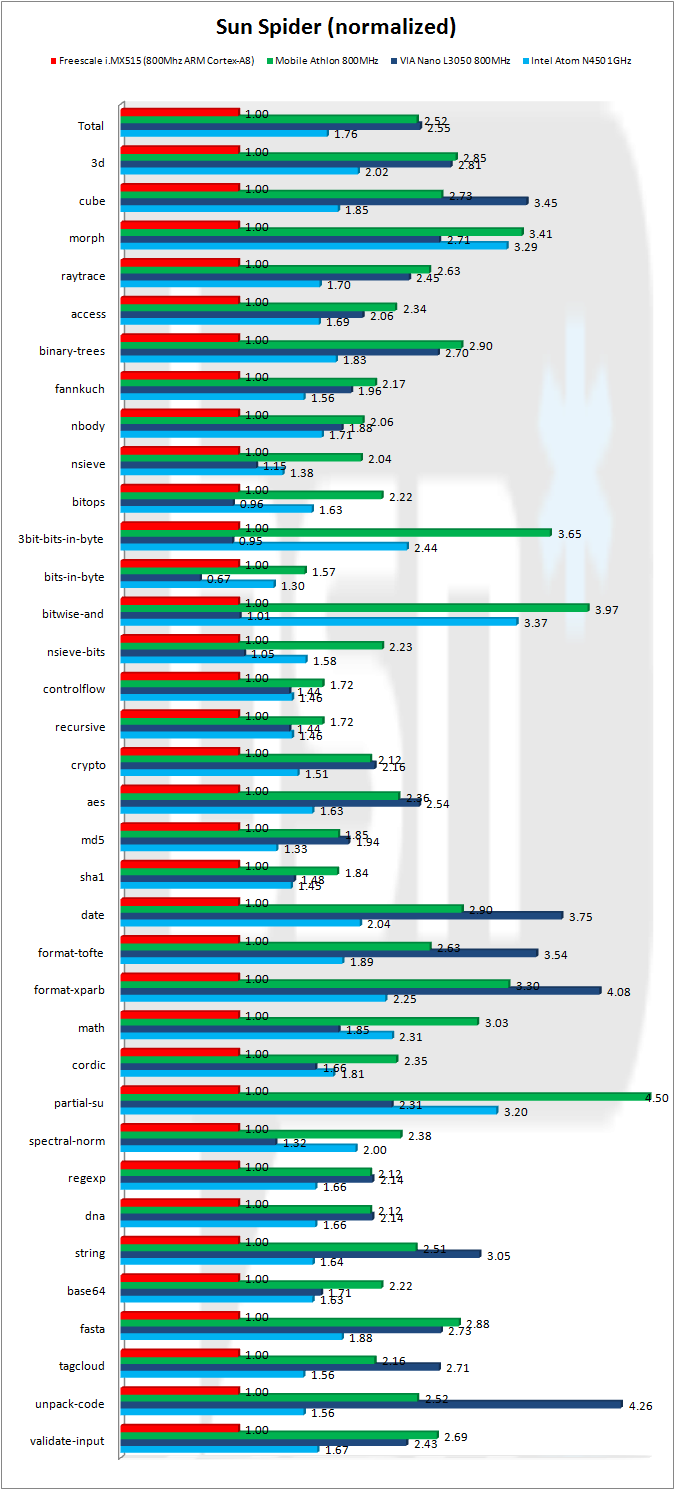

Our final JavaScript benchmark is SunSpider, perhaps the most popular JavaScript test in use today.

Again, the ARM Cortex-A8 does not look good, faring only slightly better than on the other two JavaScript benchmarks.

The VIA Nano L3050 barely pulls out an overall win, its score hurt by very poor performance on bit-level operations. The ARM Cortex-A8 beats the Nano on two of these tests.

Despite its age, the AMD Mobile Athlon based on the Barton core has delivered competitive performance across nearly all tests.

I must state at this point that the JavaScript results do seem to reflect the relative, subjective, overall feel of the four systems. Despite its strong showing on many integer tests, the Freescale i.MX515-based Pegatron system feels much more sluggish than all three of the x86 systems; the Pegatron’s extremely slow memory subsystem doubtlessly contributes to this issue. The Atom N450 is also clearly more lethargic than either the AMD Mobile Athlon or the VIA Nano L3050 systems. The AMD and VIA systems are essentially indistinguishable during normal usage.

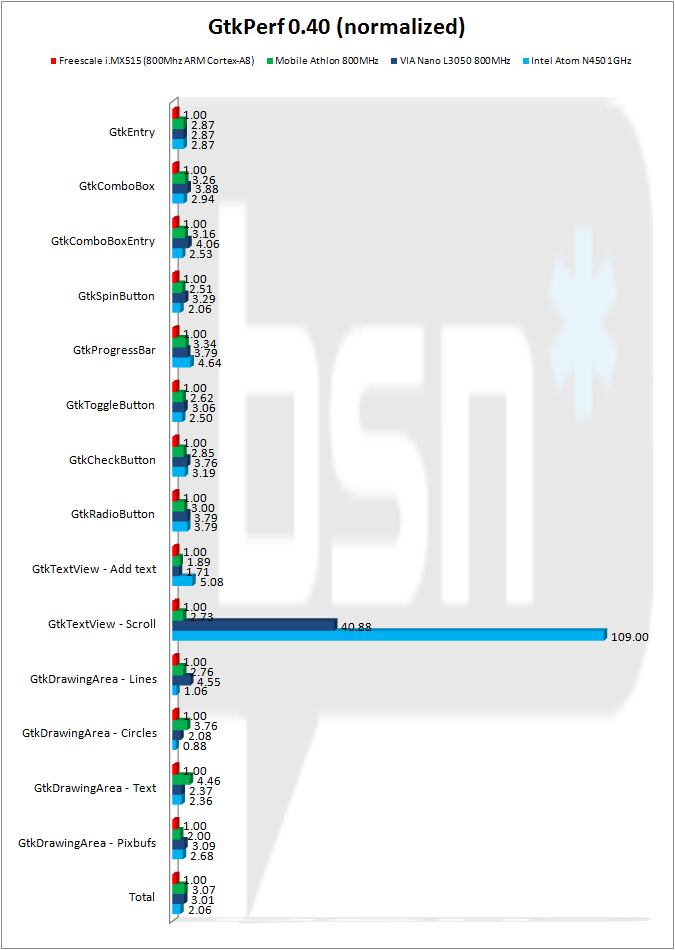

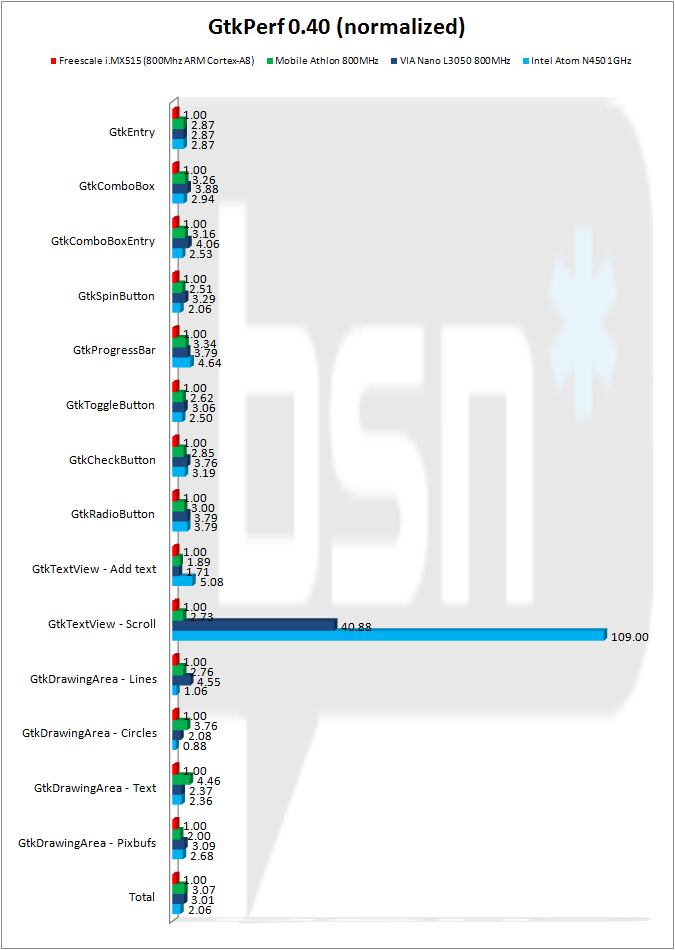

2D graphics performance

Take the following chart with a grain of salt because the video subsystems across the four systems are very dissimilar. The VIA, Intel and Freescale systems all used integrated graphics while the AMD system was equipped with a discrete NVIDIA NX6200 AGP card.

Even though the three x86 systems ran at 24-bit color depth, they were all two to three times faster than the ARM system that ran at only 16-bit color depth. We tested all systems at 1024×768 (XGA) resolution except the Atom, which we tested at the native panel resolution of 1024×600.

Power consumption

While the x86 microprocessors in this comparison enjoy a clear overall performance advantage, ARM CPUs are renowned for their power usage thriftiness. It is very difficult to compare power usage among the four CPUs under test for this report. The AMD and VIA systems are inappropriate for power comparisons because they are based on desktop hardware.

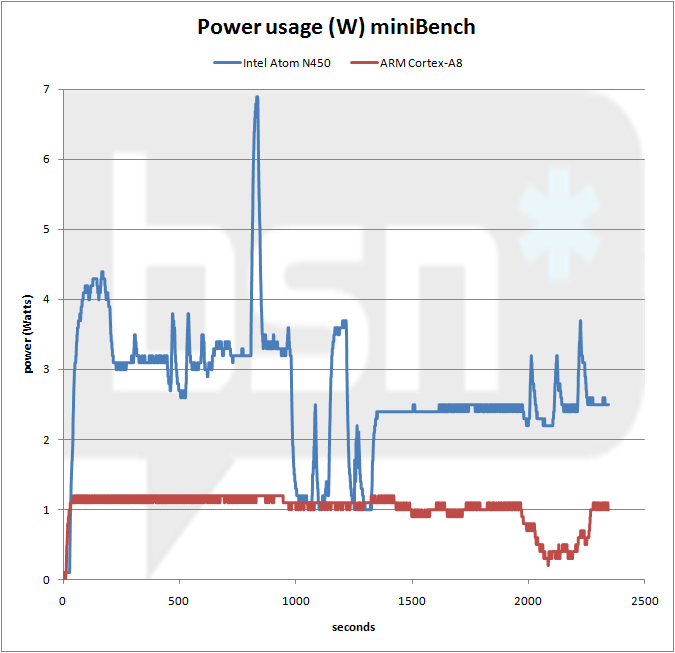

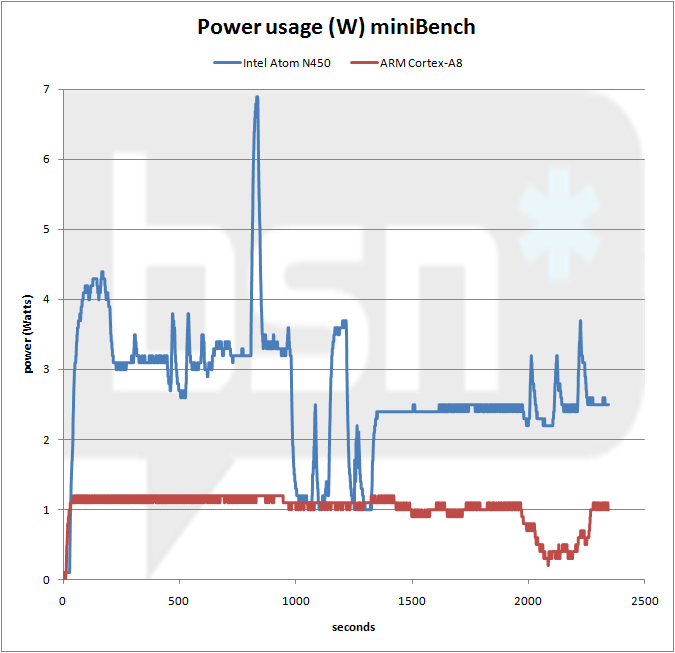

The chart below contrasts power consumption between the Intel Atom N450 and the ARM Cortex-A8 while running miniBench. The power curves were generated from system power usage adjusted downwards so that idle system power was discarded. For the Atom, idle power was 13.7W with the Gateway netbook’s integrated panel disabled while the idle power for the Pegatron system was only 5.4W.

Be aware that the Pegatron prototype does not implement many power management features. ARM representatives note:

The Pegatron development board was designed as a software development tool and does not have a commercial production software build so it does not have many of the power management features found in ARM-based mobile devices. Production systems would expect to have aggressive power management implemented, lowering the ARM power consumption.

Given this information, the results we show here likely represent an energy consumption condition considerably worse than would be encountered with a similarly configured, commercial, ARM Cortex-A8-based system.

Subtracting idle power usage should isolate the curves to the power necessary for running miniBench. Note that the Atom reached minimum power usage shortly after startup and never reached that level again. Idle power beyond that point is about 1 Watt higher. Even taking that into consideration, the Atom consumes at least three times the power of the ARM Cortex-A8 on the same tests.
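The idle adjustment described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the tooling used for this report: the two idle baselines are the figures quoted in the text, while the sample readings and the `subtract_idle` helper are hypothetical and exist only to show the calculation.

```python
# Idle baselines quoted in the text, in watts.
ATOM_IDLE_W = 13.7
ARM_IDLE_W = 5.4


def subtract_idle(samples_w, idle_w):
    """Return the power above idle for each sample, clamped at zero."""
    return [max(0.0, round(sample - idle_w, 2)) for sample in samples_w]


# Hypothetical system-power readings in watts, NOT measurements from this report.
atom_samples = [13.7, 14.7, 17.2, 16.9]
arm_samples = [5.4, 6.3, 6.5, 5.4]

print("Atom above idle:", subtract_idle(atom_samples, ATOM_IDLE_W))
print("ARM above idle: ", subtract_idle(arm_samples, ARM_IDLE_W))
```

Clamping at zero keeps a reading that dips slightly below the chosen baseline from showing up as negative power. If the Atom's later, roughly 1 Watt higher idle level were used as the baseline instead, its adjusted curve would simply shift down by about that amount.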

It’s particularly interesting to see how power usage compares on the AES tests where both CPUs deliver comparable performance. The first major hump on the Atom curve shows the power consumed on the AES tests. Compared with the ARM Cortex-A8, the Intel Atom N450 required about four times more power while delivering only about 30 percent additional performance – and this is with a 25 percent clock speed advantage.
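To put the AES comparison in performance-per-watt terms, here is a rough back-of-the-envelope sketch in Python. It uses only the approximate ratios quoted above (about four times the power, about 30 percent more performance, a 25 percent clock advantage); the function and constant names are our own, and the power ratio refers to power above idle, so treat the output as an estimate rather than a measured result.

```python
def perf_per_watt_ratio(perf_ratio: float, power_ratio: float) -> float:
    """Performance per watt of chip A relative to chip B."""
    return perf_ratio / power_ratio


# Approximate Atom-versus-ARM ratios quoted in the text for the AES tests.
ATOM_PERF_RATIO = 1.30   # about 30 percent more performance
ATOM_POWER_RATIO = 4.0   # about four times the power above idle
ATOM_CLOCK_RATIO = 1.25  # 25 percent clock speed advantage

atom_ppw = perf_per_watt_ratio(ATOM_PERF_RATIO, ATOM_POWER_RATIO)
atom_per_clock = ATOM_PERF_RATIO / ATOM_CLOCK_RATIO

print(f"Atom performance per watt relative to ARM: {atom_ppw:.3f}")
print(f"ARM performance per watt relative to Atom: {1 / atom_ppw:.2f}")
print(f"Atom performance per clock relative to ARM: {atom_per_clock:.2f}")
```

Under these assumptions the Cortex-A8 delivers roughly three times the AES performance per watt, and once the clock difference is factored out the two chips are within a few percent of each other per clock cycle.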

The sharp peak in Atom power usage occurred on the miniBench floating-point memory bandwidth tests.

The Atom completes miniBench in about one-half the time needed by the ARM Cortex-A8 due to the ARM processor’s very poor floating-point performance. The first major dips in the two curves (at about 1,000 seconds for the Atom and about 2,000 seconds for the ARM Cortex-A8) indicate where the two systems complete the benchmark.

Even though floating-point hardware can draw a lot of power, FP units usually deliver significant energy savings because floating-point operations take much less time to complete with accelerated hardware support. Energy consumed for a task is: E = P * t, where “E” is for “Energy,” “P” is for “Power” and “t” is for “time.” Good floating point hardware might drive up power demands, but the time to complete FP operations is reduced enough to dramatically reduce the total energy needed for those operations.
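As a worked illustration of E = P * t, the following Python sketch applies the formula to the rough figures given in this section: the Atom draws at least three times the ARM chip's power above idle but finishes miniBench in about half the time (roughly 1,000 seconds versus 2,000 seconds). The 1.0 W figure for the ARM chip is a hypothetical placeholder; only the ratios come from the text, and the absolute value cancels out of the final comparison.

```python
def energy_joules(power_watts: float, seconds: float) -> float:
    """Energy consumed for a task: E = P * t."""
    return power_watts * seconds


# Hypothetical placeholder for the ARM chip's average power above idle.
ARM_POWER_W = 1.0
# The text puts the Atom at three times the ARM chip's power or more.
ATOM_POWER_W = 3.0 * ARM_POWER_W

# Approximate miniBench completion times read from the power curves.
ATOM_TIME_S = 1000.0
ARM_TIME_S = 2000.0

atom_energy = energy_joules(ATOM_POWER_W, ATOM_TIME_S)
arm_energy = energy_joules(ARM_POWER_W, ARM_TIME_S)
energy_ratio = atom_energy / arm_energy

print(f"Atom energy above idle: {atom_energy:.0f} J")
print(f"ARM energy above idle:  {arm_energy:.0f} J")
print(f"Atom / ARM energy ratio: {energy_ratio:.2f}")
```

With these inputs the Atom still uses about one and a half times the energy above idle despite finishing sooner. The same formula shows the break-even point for any faster unit: it saves energy only when its speedup exceeds its increase in power draw.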

Despite the fact that the ARM Cortex-A8 blows away the Intel Atom in power thriftiness, don’t belittle the Atom. It is a resounding success in terms of reducing the power demands of x86 microprocessors. The Intel Atom is currently the only realistic x86 system-on-chip (SoC) design ready to migrate downwards into smartphones.

The Intel Atom was doubtlessly inspired by the VIA C7, which explains why Intel set up shop in Austin, the same town where VIA’s Centaur design team is headquartered (in fact, a few ex-“Centaurians” worked on the Atom). The Atom delivers acceptable performance while sipping power at levels far lower than usually seen in the x86 world. Right now, there is no competing, low-power x86 CPU – let alone SoC – that can match the Atom in terms of performance per Watt, especially on multithreaded applications.

Conclusion

The ARM Cortex-A8 achieves surprisingly competitive performance across many integer-based benchmarks while consuming power at levels far below the most energy miserly x86 CPU, the Intel Atom. In fact, the ARM Cortex-A8 matched or even beat the Intel Atom N450 across a significant number of our integer-based tests, especially when compensating for the Atom’s 25 percent clock speed advantage.

However, the ARM Cortex-A8 sample that we tested in the form of the Freescale i.MX515 lived in an ecosystem that was not competitive with the x86 rivals in this comparison. The video subsystem is very limited. Memory support is limited to a very slow 32-bit DDR2-200MHz interface.

Languishing across all of the JavaScript benchmarks, the ARM Cortex-A8 was only one-third to one-half as fast as the x86 competition. However, this might partially be a result of the very slow memory subsystem that burdened the ARM core.

More troubling is the unacceptably poor double-precision floating-point throughput of the ARM Cortex-A8. While floating-point performance isn’t important to all tasks and is certainly not as important as integer performance, it cannot be ignored if ARM wants its products to successfully migrate upwards into traditional x86-dominated market spaces.

However, new ARM-based products like the NVIDIA Tegra 2 address many of the performance deficiencies of the Freescale i.MX515. Incorporating two ARM Cortex-A9 cores (more specifically, two ARM Cortex-A9 MPCore processors), a vastly more powerful GPU and support for DDR2-667 (although still constrained to 32-bit access), the Tegra 2 will doubtlessly prove to be highly performance competitive with the Intel Atom, at least on integer-based tests. Regarding the Cortex-A8’s biggest weakness, ARM representatives told us its successor, the Cortex-A9, “has substantially improved floating-point performance.” NVIDIA’s CUDA will eventually also help boost floating-point processing speed on certain chores.

Unmatched software support has always been the “ace in the hole” for the x86 contingent. However, with the success of Linux and the maturity of its underlying and critical GNU development toolset, Linux/GNU support could be the great equalizer that allows ARM to finally overcome the x86 stranglehold in netbooks and even notebooks and desktops. Maturing Linux support might also assist ARM chips to make further incursions into gaming devices.

I didn’t expect it, but the emerging war between ARM and x86 microprocessors is turning out to be much more competitive and interesting than I ever imagined.

In addition to the main ARM versus x86 focus of this report, there is also a subplot pitting the new Intel Atom N450 against the new VIA Nano L3050. The Intel Atom N450 is a remarkable product in that it is the first x86 SoC (system-on-chip) that is suitable for smartphones and other ultra-low power environments. As such, the Atom promises to dramatically improve the sophistication and performance levels of those market spaces.

While the various Atom models currently dominate the booming netbook market, it is evident from our JavaScript tests that the VIA Nano L3050 is much more desirable if JavaScript performance is important at all. Across our JavaScript benchmark results, the 800MHz VIA Nano L3050 is about 50 percent faster than the 1GHz Intel Atom N450.

However, VIA still lags Intel in terms of suitability for low power consumption environments, largely because Intel leverages its outstanding 45nm fabrication technologies with the Atom, while VIA still produces the Nano L3050 in the relatively elderly 65nm Fujitsu process node. The Atom is also strong on multithreaded tasks as demonstrated by its CoreMark victory. HyperThreading will also benefit Atom in I/O intensive environments where the single-core Nano will be hard-pressed to keep up.

Lastly, the AMD Mobile Athlon in this comparison gives us important insight into how the new chips from Intel, VIA and ARM stack up historically. Overall, across all of our performance tests, the ancient Barton core-based Athlon came in a very close second behind the VIA Nano L3050. This suggests AMD could easily produce a competitive low power CPU if the chipmaker did nothing else but shrink one of its older core designs while adding a few power saving tweaks.

In summary, ARM is positioned very well to engage in battles with the Intel Atom as that x86 chip advances into smartphones. The ARM Cortex-A8 appears to use much less power than the Atom, while often delivering comparable integer performance. The Atom is significantly faster overall when considering holistic system performance, but that performance will be accompanied by a battery life penalty and significantly more heat production. Heat is a serious problem within the tight confines of mobile phones.

New chips based upon ARM Cortex-A9 derivatives, like the NVIDIA Tegra 2, address many of the performance weaknesses we encountered with the Freescale i.MX515. If ARM is to achieve sustained victories in the netbook space – let alone in the more performance demanding notebook and desktop spaces – ARM must substantially improve floating-point throughput.

While the dedicated functional block approach used by ARM and its legions of licensees to provide image manipulation, video decoding/encoding, security and Java acceleration is still valid, it is not a substitute for double-precision floating-point performance.

ARM representatives told us for this report that the Cortex-A9 “has substantially improved floating-point performance.” It will take a big jump forward to catch their x86 rivals, but if ARM pulls it off, Intel, AMD and VIA are going to have a big, bloody war on their hands. It is conceivable the x86 empire might finally see the boundaries of its swelling, vast territories begin to retract in the near future under an army ant-like assault of tiny, fast, cheap, multi-core ARM microprocessors coming at them from dozens of different companies.

ARM’s success might also have a negative impact on Microsoft, since Linux will almost certainly play a major role in ARM’s ability to storm the netbook, “nettop,” notebook and even desktop spaces.

Whatever the outcome, it’s time to pay attention to ARM. Our results clearly demonstrate how it was possible for an ARM chip to steal the Apple iPad away from Intel’s Atom. The Apple iPad might represent merely the first of many ARM victories in its escalating war against the x86 world.

We thank Katie Traut and Phillipe Robin from ARM for the impressively tiny but full featured Freescale i.MX515-powered Pegatron prototype Ubuntu system. We also thank C.J. Holthaus and Glenn Henry from Centaur Technology for the VIA Nano L3050 reference board.

Last summer after eight years there, Van Smith left his job at Centaur Technology to form the company Cossatot Analytics Laboratories. Van was head of benchmarking for Centaur and represented VIA Technologies within the BAPCo benchmark consortium. Van has written a number of computer benchmarks including OpenSourceMark and miniBench and he has influenced or directly contributed to many others. For instance, Van wrote the cryptography tests in SiSoftware Sandra.

Nearly ten years ago, Van departed Tom’s Hardware Guide as Senior Editor to form his own website, Van’s Hardware Journal (VHJ). Van was recently interviewed and quoted in a CNN article based upon his investigative journalism published at VHJ. Van also served as Senior Analyst for InQuest Market Research.